Abstract

Ubiquitous sensors and smart devices in factories and communities are generating massive amounts of data, and ever-increasing computing power is pushing computation and services from the cloud to the edge of the network.

1. Introduction

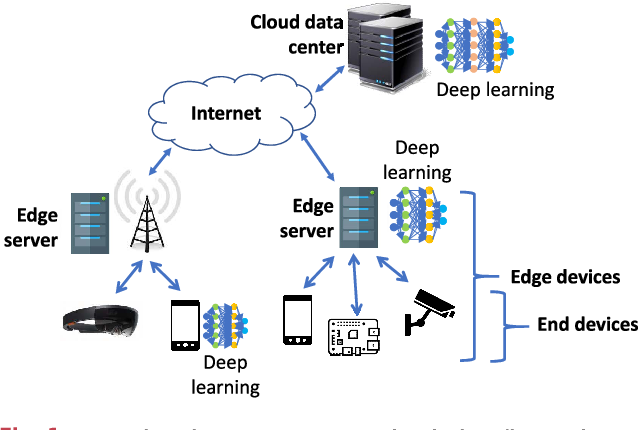

Because of the proliferation of computing and storage devices, ranging from server clusters in cloud data centres (the cloud) to personal computers and smartphones, and further to wearable and other Internet of Things (IoT) devices, we have entered an information-centric era in which computing is ubiquitous and computation services are spilling over from the cloud to the edge. A Cisco white paper [1] predicts that 50 billion IoT devices will be connected to the Internet by 2020. In addition, Cisco estimates that by 2021 around 850 zettabytes (ZB) of data will be generated outside the cloud each year, while global data centre traffic will be only 20.6 ZB [2].

This suggests that the sources of big data are also changing, shifting from large-scale cloud data centers to an increasingly diverse spectrum of edge devices. However, existing cloud computing is gradually becoming unable to manage this massively distributed computing power and analyze its data: 1) a large number of computation tasks must be delivered to the cloud for processing [3], which undoubtedly places significant strain on network capacity and on the computing power of cloud computing infrastructures; 2) many new types of applications, such as cooperative autonomous driving, have strict delay requirements that the cloud would struggle to meet due to its remote location [4].

The achievements of deep learning (DL) are not only derived from the evolution of DL itself but are also inextricably linked to increasing data and computing power.

The advantages of deploying DL services at the edge include:

1) DL services are deployed close to the requesting users, and the cloud only participates when additional processing is required [12], hence significantly reducing the latency and cost of sending data to the cloud for processing; 2) since the raw data required for DL services is stored locally on the edge or user devices themselves instead of the cloud, protection of user privacy is enhanced; 3) the hierarchical computing architecture provides more reliable DL computation; 4) with richer data and application scenarios, edge computing can promote the pervasive application of DL and realize the prospect of “providing AI for every person and every organization at everywhere” [13]; 5) diversified and valuable DL services can broaden the commercial value of edge computing and accelerate its deployment and growth.

Relying on the cloud alone for DL services faces the following challenges:
• Latency: the delay to access cloud services is generally not guaranteed and might not be short enough to satisfy the requirements of many time-critical applications such as cooperative autonomous driving [11];
• Reliability: most cloud computing applications rely on wireless communications and backbone networks for connecting users to services, but in many industrial scenarios, intelligent services must be highly reliable, even when network connections are lost;
• Privacy: the data required for DL might carry a lot of private information, and privacy issues are critical in areas such as smart homes and smart cities.

[Figure: the intelligent edge, unleashing intelligent services from the cloud (server clusters) through edge nodes and base stations to end devices running intelligent applications]

To be specific, on one hand, edge intelligence is expected to push DL computations from the cloud to the edge as much as possible, thus enabling various distributed, low-latency, and reliable intelligent services. Different from these works, this survey focuses on the following respects: 1) comprehensively consider deployment issues of DL by edge computing, spanning networking, communication, and computation; 2) investigate …

[Figure: overview of the survey: DL applications on edge, DL inference in edge, edge computing for DL services, DL training at edge, and DL for optimizing edge]

Second, to enable and speed up Edge DL services, we focus on a variety of techniques supporting the efficient inference of DL models in edge computing frameworks and networks, called “DL inference in Edge”.

In this case, for the related works leveraging edge computing resources to train various DL models, we classify them as “DL training at Edge”.

Fundamentals of Edge Computing

Edge computing has become an important solution to break the bottleneck of emerging technologies by virtue of its advantages in reducing data transmission, improving service latency, and easing cloud computing pressure.

Paradigms of Edge Computing

Mobile Edge Computing (MEC) places computing capabilities and service environments at the edge of cellular networks. Deploying edge servers at cellular base stations allows users to deploy new applications and services flexibly and quickly.

Definition of Edge Computing Terminologies

The definition and division of edge devices are ambiguous in most literature. As shown in Fig. 1, we further divide common edge devices into end devices and edge nodes: «end devices» refers to mobile edge devices and various IoT devices, while «edge nodes» are servers deployed at the edge of the network, such as those at base stations. In the collaborative end-edge-cloud computing paradigm, computation tasks with lower computational intensity generated by end devices can be executed directly on the end devices or offloaded to the edge, thus avoiding the delay caused by sending data to the cloud. A computation-intensive task can be reasonably segmented and dispatched separately to the end, edge, and cloud for execution, reducing the execution delay of the task while ensuring the accuracy of the results. The focus of this collaborative paradigm is not only the successful completion of tasks but also achieving the optimal balance among device energy consumption, server load, and transmission and execution delays.

Hardware for Edge Computing

Unlike Qualcomm's chips, which use GPUs for DL acceleration, HiSilicon's 600 series and 900 series chips do not depend on GPUs. Instead, they incorporate an additional Neural Processing Unit (NPU) to achieve fast calculation of vectors and matrices, which greatly improves the efficiency of DL. Compared with HiSilicon and Qualcomm, MediaTek's Helio P60 not only uses GPUs but also introduces an AI Processing Unit (APU) to further accelerate neural network computing.

Virtualizing the edge

The requirements of virtualization technology for integrating edge computing and DL are reflected in the following aspects:

1) The resources of edge computing are limited. Edge computing cannot provide resources for DL services as abundantly as the cloud does, so virtualization technologies should maximize resource utilization under these constraints; 2) DL services rely heavily on complex software libraries.

As shown in Fig. 8, both computation virtualization and the integration of network virtualization and management technologies are necessary. In this section, we discuss potential virtualization technologies for the edge.

For example, with the support of network function virtualization (NFV) and software-defined networking (SDN), edge nodes can be efficiently orchestrated and integrated with cloud data centers. On the other hand, both virtual network functions (VNFs) and Edge DL services can be hosted on a lightweight NFV framework.

Network Slicing: Network slicing is a form of agile and virtual network architecture, a high-level abstraction of the network that allows multiple network instances to be created on top of a common shared physical infrastructure, each optimized for specific services.

3. Fundamentals of deep learning

In computer vision (CV), natural language processing (NLP), and AI, DL is adopted in a myriad of applications and has demonstrated superior performance.

Nonetheless, because the edge computing architecture covers a large number of distributed edge devices, it can be utilized to better serve DL. However, edge devices typically have limited computing power and energy compared with the cloud. Therefore, the combination of DL and edge computing is not straightforward and requires a comprehensive understanding of DL models and edge computing features for design and deployment.

Neural network in deep learning

Each neuron in RNNs not only receives information from the preceding layer but also receives its own state from the previous time step. In general, RNNs are natural choices for predicting future information or restoring missing parts of sequential data. However, a serious problem with RNNs is gradient explosion. Transfer learning (TL) can transfer knowledge, as shown in Fig. 7, from the source domain to the target domain to achieve better learning performance in the target domain.
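To make the recurrence and the gradient explosion problem concrete, here is a minimal sketch assuming a scalar vanilla RNN with a tanh activation, h_t = tanh(w_x * x_t + w_h * h_{t-1}); the weights are hypothetical. Backpropagating through T steps multiplies T factors w_h * (1 - h_t^2), so the gradient grows geometrically when |w_h| > 1 and the activations stay in the near-linear region of tanh. Gradient clipping is shown as one common mitigation.

```python
import math


def rnn_forward(xs, w_x, w_h, h0=0.0):
    """Scalar vanilla RNN: each step mixes the new input with the previous hidden state."""
    hs = [h0]
    for x in xs:
        hs.append(math.tanh(w_x * x + w_h * hs[-1]))
    return hs  # hs[t] is the hidden state after t inputs


def grad_through_time(hs, w_h):
    """d h_T / d h_0 for the scalar tanh RNN: product of w_h * (1 - h_t^2) over all steps."""
    g = 1.0
    for h in hs[1:]:
        g *= w_h * (1.0 - h * h)
    return g


def clip_gradient(g, max_abs=5.0):
    """Clip a scalar gradient to [-max_abs, max_abs]."""
    return max(-max_abs, min(max_abs, g))


# Zero inputs keep h_t = 0, so every factor equals w_h and the gradient is w_h ** T.
hs = rnn_forward([0.0] * 20, w_x=1.0, w_h=1.5)
g = grad_through_time(hs, w_h=1.5)  # 1.5 ** 20, about 3.3e3: exploding
print(g, clip_gradient(g))
```

With w_h = 0.5 instead, the same product shrinks to about 1e-6, which is the mirror-image vanishing-gradient case.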

By using TL, existing knowledge learned with a large amount of computing resources can be transferred to a new scenario, thus accelerating the training process and reducing model development costs. As shown in Fig. 7, knowledge distillation (KD) can extract implicit knowledge from a well-trained model whose inference achieves excellent performance but incurs high overhead. Then, by designing the structure and objective function of the target DL model, the knowledge is «transferred» to a smaller DL model, so that the significantly reduced target DL model achieves performance as high as possible. In DRL, Deep Q-Learning (DQL) uses DNNs to fit action values, successfully mapping high-dimensional input data to actions.
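For KD, a minimal sketch of a distillation objective follows, assuming the widely used soft-target formulation (the cited works may use different objectives): the student is trained on a mix of the KL divergence to the teacher's temperature-softened outputs and ordinary cross-entropy on the hard label. The temperature and the mixing weight `alpha` are hypothetical hyperparameters.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax with temperature scaling."""
    m = max(logits)
    exps = [math.exp((z - m) / temperature) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kd_loss(student_logits, teacher_logits, label, temperature=4.0, alpha=0.5):
    """alpha * T^2 * KL(teacher_T || student_T) + (1 - alpha) * CE(label, student)."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    ce = -math.log(softmax(student_logits)[label])
    # The T^2 factor keeps soft-target gradients on a comparable scale as T changes.
    return alpha * temperature ** 2 * kl + (1 - alpha) * ce


teacher = [1.0, 2.0, 5.0]
print(kd_loss([1.0, 2.0, 5.0], teacher, label=2))  # student matches teacher: only the CE term remains
print(kd_loss([5.0, 2.0, 1.0], teacher, label=2))  # mismatched student: much larger loss
```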

Deep Reinforcement learning

In DQL, to ensure stable convergence of training, experience replay is adopted to break the correlation between transitions, and a separate target network is set up to suppress instability. At present, training DL models in a centralized manner consumes considerable time and computation resources, hindering further improvement of algorithm performance. Distributed training, by contrast, can speed up the training process by taking full advantage of parallel servers.
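The two stabilizers of DQL mentioned above, experience replay and a target network, can be sketched as follows. This is a minimal illustration under explicit assumptions: a linear Q-function over one-hot state features stands in for the DNN, and the two-state chain environment is hypothetical. From state A, action 1 moves to state B with reward 0, while action 0 ends the episode; any action in B ends the episode with reward 1, so with gamma = 0.9 the learned Q(A, 1) should approach 0.9.

```python
import random
from collections import deque

A, B = (1.0, 0.0), (0.0, 1.0)  # one-hot features of the two hypothetical states


class ReplayBuffer:
    """Stores transitions; uniform random sampling breaks their temporal correlation."""

    def __init__(self, capacity):
        self.items = deque(maxlen=capacity)

    def push(self, transition):
        self.items.append(transition)

    def sample(self, batch_size):
        return random.sample(list(self.items), batch_size)


def q_values(weights, state):
    """Linear Q-function: one weight vector per action."""
    return [sum(w * x for w, x in zip(w_a, state)) for w_a in weights]


def step_env(state, action):
    if state == A:
        return (B, 0.0, False) if action == 1 else (A, 0.0, True)
    return (B, 1.0, True)


def dqn_update(online, target, batch, gamma=0.9, lr=0.1):
    """SGD on (r + gamma * max_a' Q_target(s', a') - Q_online(s, a))^2."""
    for s, a, r, s_next, done in batch:
        y = r if done else r + gamma * max(q_values(target, s_next))  # bootstrap from the target net
        td_error = y - q_values(online, s)[a]
        online[a] = [w + lr * td_error * x for w, x in zip(online[a], s)]


random.seed(0)
online = [[0.0, 0.0], [0.0, 0.0]]   # 2 actions x 2 features
target = [list(w) for w in online]  # separate, periodically synced copy
buffer = ReplayBuffer(capacity=100)
for episode in range(300):
    state, done = A, False
    while not done:
        action = random.randrange(2)  # purely exploratory behaviour policy, for brevity
        next_state, reward, done = step_env(state, action)
        buffer.push((state, action, reward, next_state, done))
        state = next_state
        if len(buffer.items) >= 8:
            dqn_update(online, target, buffer.sample(8))
    if episode % 10 == 0:
        target = [list(w) for w in online]

print(q_values(online, A))  # roughly [0.0, 0.9]
```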

Distributed DL Training

Model parallelism first splits a large DL model into multiple parts and then feeds data samples to train these segmented models in parallel. Training a large DL model generally requires substantial computation resources; thousands of CPUs may even be required to train a large-scale DL model. To solve this problem, distributed GPUs can be utilized for model-parallel training. Data parallelism means dividing data into multiple partitions and then training copies of the model in parallel, each with its own allocated data samples.
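Data parallelism as described above can be sketched with a toy linear-regression model: the data are partitioned, each worker computes a gradient on its own shard with its own copy of the parameters, and the gradients are averaged synchronously (as a parameter server or all-reduce would do) before every replica applies the same update. The model, learning rate, and synthetic data are assumptions for illustration. With equal-sized shards, the averaged gradient equals the full-batch gradient, so the result matches single-worker training.

```python
def shard_gradient(w, b, shard):
    """Gradient of the mean squared error of y ~ w * x + b over one worker's shard."""
    n = len(shard)
    gw = sum(2 * (w * x + b - y) * x for x, y in shard) / n
    gb = sum(2 * (w * x + b - y) for x, y in shard) / n
    return gw, gb


def data_parallel_step(w, b, data, num_workers, lr=0.05):
    shards = [data[i::num_workers] for i in range(num_workers)]  # partition the data
    grads = [shard_gradient(w, b, s) for s in shards]            # computed in parallel in practice
    gw = sum(g[0] for g in grads) / num_workers                  # synchronous gradient averaging
    gb = sum(g[1] for g in grads) / num_workers
    return w - lr * gw, b - lr * gb                              # every replica applies the same update


data = [(k / 10, 3 * (k / 10) + 1) for k in range(40)]  # synthetic samples of y = 3x + 1
w, b = 0.0, 0.0
for _ in range(2000):
    w, b = data_parallel_step(w, b, data, num_workers=4)
print(round(w, 3), round(b, 3))  # approaches 3.0 and 1.0
```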

Potential DL Libraries for edge

Coincidentally, a large number of end devices, edge nodes, and cloud data centers are scattered and envisioned to be connected by networks.

4. Deep learning applications on edge

Real-time video analytics

In general, DL services are currently deployed in cloud data centers to handle requests, because most DL models are complex and their inference results are hard to compute on resource-limited devices. However, such an «end-cloud» architecture cannot meet the needs of real-time DL services such as real-time analytics and smart manufacturing. Thus, deploying DL applications on the edge can broaden the application scenarios of DL, especially with regard to low latency. In the following, we present edge DL applications and highlight their advantages over comparable architectures without edge computing. When the edge is unable to provide the service confidently, the cloud can use its powerful computing power and global knowledge for further processing and assist the edge nodes in updating DL models.

Autonomous Internet of Vehicles

Further, DL techniques are expected to optimize complex Internet of Vehicles (IoV) systems. In one work, a framework dealing with these challenges is developed to support complex-event learning during intelligent manufacturing, thus facilitating the development of real-time applications on IoT edge devices. The limited resources of IoT edge devices should also be considered. Therefore, caching, communication with heterogeneous IoT devices, and computation offloading can be integrated to break the resource bottleneck.

Intelligent Manufacturing

The popularity of IoT will bring more and more intelligent applications to home life, such as intelligent lighting control systems, smart televisions, and smart air conditioners. In such use cases, edge computing is deployed to optimize indoor positioning systems and home intrusion monitoring, achieving lower latency and better accuracy than cloud computing. Further, the combination of DL and edge computing can make these intelligent services more varied and powerful. If the smart home is enlarged to a community or city, public safety, health data, public facilities, transportation, and other fields can benefit.

Smart home and city

The natural characteristic of geographically distributed data sources in cities requires an edge computing-based paradigm to offer location-aware and latency-sensitive monitoring and intelligent control. For instance, the hierarchical distributed edge computing architecture can support the integration of massive infrastructure components and services in future smart cities. This architecture can not only support latency-sensitive applications on end devices but also perform slightly latency-tolerant tasks efficiently on edge nodes, while large-scale DL models responsible for deep analysis are hosted on the cloud. Besides, DL can be utilized to orchestrate and schedule infrastructures to achieve holistic load balancing and optimal resource utilization within a region of a city or across the whole city.

5. Deep learning inference in edge

DL models are generally deployed in the cloud, while end devices just send input data to the cloud and then wait for the DL inference results. However, cloud-only inference limits the ubiquitous deployment of DL services. Specifically, it cannot guarantee the delay requirements of real-time services, e.g., real-time detection with strict latency demands.

Moreover, for important data sources, data safety and privacy protection should be addressed. To deal with these issues, DL services tend to resort to edge computing. Therefore, DL models should be further customized to fit the resource-constrained edge, while carefully treating the trade-off between inference accuracy and execution latency.

These approaches can be applied to different kinds of DNNs or be composed to optimize a complex DL model for the edge. Region-of-Interest encoding to focus on target objects in video frames can further reduce the bandwidth consumption and data transmission delay. Though this kind of method can significantly compress the size of model inputs and hence reduce the computation overhead without altering the structure of DL models, it requires a deep understanding of the related application scenario to dig out the potential optimization space.

Parameter pruning can also be applied adaptively in model structure optimization. Furthermore, optimization can be more efficient if it crosses the boundaries between algorithm, software, and hardware. Specifically, general-purpose hardware is not ready for the irregular computation patterns introduced by model optimization. Therefore, hardware architectures should be designed to work directly with optimized models.
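Magnitude-based pruning is one common form of parameter pruning. A minimal sketch follows, assuming unstructured, per-layer pruning in which the smallest-magnitude weights are zeroed; the per-layer `sparsity_per_layer` mapping is where an adaptive scheme would plug in its own choices, and the layer names and values are hypothetical. The scattered zeros it leaves behind are exactly the irregular computation pattern that general-purpose hardware handles poorly.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest absolute values."""
    k = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:k]:
        pruned[i] = 0.0
    return pruned


def prune_model(layers, sparsity_per_layer):
    """Apply a (possibly different) sparsity to each layer, e.g. chosen by a sensitivity analysis."""
    return {name: magnitude_prune(w, sparsity_per_layer[name]) for name, w in layers.items()}


layers = {"conv1": [0.1, -0.5, 0.05, 2.0], "fc": [0.3, -0.01, 0.7, -0.2]}
pruned = prune_model(layers, {"conv1": 0.5, "fc": 0.25})
print(pruned)  # {'conv1': [0.0, -0.5, 0.0, 2.0], 'fc': [0.3, 0.0, 0.7, -0.2]}
```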

One work evaluates the delay and power consumption of state-of-the-art DL models on the cloud and edge devices, finding that uploading data to the cloud is the bottleneck of current DL servicing methods. Model segmentation addresses this: horizontal partitioning splits a DL model by layers between the end device and the edge server, or among heterogeneous local processors, choosing the best one from candidate partition points according to delay, energy requirements, etc. Another kind of model segmentation is vertical partitioning, particularly for CNNs. In contrast to horizontal partitioning, vertical partitioning fuses layers and partitions them vertically in a grid fashion, and thus divides CNN layers into independently distributable computation tasks.
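The grid-style vertical partition can be illustrated by computing which input region each output tile needs. This sketch assumes a stack of `num_layers` fused stride-1 convolutions without padding and with a `kernel` x `kernel` filter, so each fused layer grows the receptive field by `kernel - 1`; real frameworks must also handle padding, strides, and pooling. Each tile can then be computed independently on a different device, at the cost of recomputing the overlapping halos.

```python
def split_range(length, parts):
    """Split [0, length) into `parts` contiguous ranges whose sizes differ by at most one."""
    base, extra = divmod(length, parts)
    ranges, start = [], 0
    for p in range(parts):
        end = start + base + (1 if p < extra else 0)
        ranges.append((start, end))
        start = end
    return ranges


def fused_tile_partition(out_h, out_w, grid_rows, grid_cols, num_layers, kernel):
    """Map each output tile of the fused layers to the input region it depends on."""
    halo = num_layers * (kernel - 1)  # extra input rows/columns needed beyond the tile
    tiles = []
    for r0, r1 in split_range(out_h, grid_rows):
        for c0, c1 in split_range(out_w, grid_cols):
            tiles.append({"output": ((r0, r1), (c0, c1)),
                          "input": ((r0, r1 + halo), (c0, c1 + halo))})
    return tiles


# A 10 x 10 input through two fused 3 x 3 layers gives a 6 x 6 output; split it 2 x 2.
for tile in fused_tile_partition(6, 6, 2, 2, num_layers=2, kernel=3):
    print(tile)
```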

TO BE APPEARED IN IEEE COMMUNICATIONS SURVEYS & TUTORIALS

As DNNs grow larger and deeper, these costs become more prohibitive for edge devices running real-time and energy-sensitive DL applications. By adding side-branch classifiers, early exit of inference (EEoI) allows some samples to exit inference early via these branches if they are classified with high confidence. For more difficult samples, EEoI uses more or all DNN layers to provide the best predictions. As shown in Fig. 15, by taking advantage of EEoI, fast and localized inference using shallow portions of DL models at edge devices can be enabled.
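A minimal sketch of EEoI, assuming the backbone is a list of stage functions, each non-final stage has a side-branch classifier returning logits, and a sample exits as soon as a branch's top softmax probability reaches a hypothetical confidence `threshold`. The toy stages and heads below are illustrative stand-ins for DNN blocks.

```python
import math


def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def early_exit_inference(x, stages, branches, final_head, threshold=0.9):
    """Return (predicted class, number of stages executed)."""
    h = x
    for i, stage in enumerate(stages):
        h = stage(h)
        is_last = i == len(stages) - 1
        probs = softmax(final_head(h) if is_last else branches[i](h))
        confidence = max(probs)
        if is_last or confidence >= threshold:  # confident enough (or out of layers): stop here
            return probs.index(confidence), i + 1


def double(h):
    return 2 * h


def head(h):
    return [h, -h]  # toy two-class scores


stages = [double, double, double]
branches = [head, head]
for x in (5.0, 0.5, 0.1):
    print(x, early_exit_inference(x, stages, branches, head))
```

Easy inputs (large |x|) leave after the first stage, while ambiguous ones run the whole backbone.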

By this means, the shallow model on the edge device can quickly perform initial feature extraction and, if confident, directly give inference results. Otherwise, the additional large DL model deployed in the cloud performs further processing and final inference. Compared with directly offloading DL computation to the cloud, this approach has lower communication costs, and it can achieve higher inference accuracy than pruned or quantized DL models on edge devices. In addition, since only intermediate features rather than the original data are sent to the cloud, it provides better privacy protection.

Nevertheless, EEoI shall not be deemed independent of model optimization and segmentation. Another approach caches DL models for recognition applications in the edge node and minimizes expected end-to-end latency by dynamically adjusting its cache size. A further approach reuses intermediate results, i.e., caches internally processed data within CNN layers, to reduce the processing latency of continuous vision applications. Specifically, it divides each video frame into fine-grained regions and searches cached frames for similar regions following a specific pattern of video motion heuristics.

For the same challenge, FoggyCache first embeds heterogeneous raw input data into feature vectors with a generic representation. Then, Adaptive Locality Sensitive Hashing (A-LSH), a variant of LSH commonly used for indexing high-dimensional data, is proposed to index these vectors for fast and accurate lookup.
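To illustrate result reuse through locality-sensitive hashing, here is a minimal sketch using plain random-hyperplane LSH (SimHash) for cosine similarity; it is not FoggyCache's A-LSH, which additionally adapts its parameters. Similar feature vectors tend to fall into the same bucket, and a cached result is reused only if its vector also passes a hypothetical cosine-similarity threshold. Practical systems usually keep several hash tables to reduce misses near bucket boundaries.

```python
import math
import random


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


class LSHResultCache:
    def __init__(self, dim, num_bits=16, seed=0):
        rng = random.Random(seed)
        self.planes = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_bits)]
        self.buckets = {}

    def _key(self, vec):
        # One bit per random hyperplane: which side of it the vector lies on.
        return tuple(int(sum(p * v for p, v in zip(plane, vec)) >= 0) for plane in self.planes)

    def put(self, vec, result):
        self.buckets.setdefault(self._key(vec), []).append((vec, result))

    def get(self, vec, min_cosine=0.95):
        """Return the most similar cached result in the same bucket, or None on a miss."""
        best, best_sim = None, min_cosine
        for cached_vec, result in self.buckets.get(self._key(vec), []):
            sim = cosine(vec, cached_vec)
            if sim >= best_sim:
                best, best_sim = result, sim
        return best


cache = LSHResultCache(dim=4)
cache.put([0.9, 0.1, 0.0, 0.2], "cat")
print(cache.get([1.8, 0.2, 0.0, 0.4]))     # same direction, same bucket: "cat"
print(cache.get([-0.9, -0.1, 0.0, -0.2]))  # opposite direction: None
```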

Different from sharing inference results, Mainstream shares DNN computation among concurrent video processing applications.

By exploiting computation sharing of specialized models among applications trained through TL from a common DNN stem, aggregate per-frame compute time can be significantly decreased.

6. Edge computing for deep learning

Extensive deployment of DL services, especially mobile DL services, requires the support of edge computing.

Low-power IoT edge devices can be used to undertake lightweight DL computation, hence avoiding communication with the cloud, but they still face limited computation resources, memory, and energy. With CMSIS-NN, the memory footprint of NNs on ARM Cortex-M processor cores can be minimized, so that DL models can fit into IoT devices while achieving normal performance and energy efficiency. To address the bottleneck of running CNN layers on mobile GPUs, DeepMon decomposes the matrices used in the CNN layers to accelerate the multiplications between high-dimensional matrices. FPGA-based edge devices can achieve CNN acceleration with arbitrarily sized convolution and reconfigurable pooling, and for RNN-based speech recognition applications they run faster than state-of-the-art CPU and GPU implementations while achieving higher energy efficiency.
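Shrinking the memory footprint of a model for microcontroller-class devices usually relies on low-precision fixed-point arithmetic. The sketch below uses generic symmetric 8-bit quantization with one float scale per tensor; this scheme is an assumption for illustration, not the exact q7/q15 fixed-point format or API of CMSIS-NN. Each weight drops from 4 bytes (float32) to 1 byte, and the dot product is accumulated in integers before a single rescale.

```python
from array import array


def quantize_int8(values):
    """Symmetric per-tensor quantization: v is approximated by q * scale with q in [-127, 127]."""
    scale = max(abs(v) for v in values) / 127 or 1.0  # fall back to 1.0 for an all-zero tensor
    q = array("b", (max(-127, min(127, round(v / scale))) for v in values))
    return q, scale


def int8_dot(qa, scale_a, qb, scale_b):
    acc = sum(x * y for x, y in zip(qa, qb))  # integer multiply-accumulate
    return acc * scale_a * scale_b           # one floating-point rescale at the end


a, b = [0.5, -1.0, 0.25], [1.0, 0.5, -0.5]
qa, sa = quantize_int8(a)
qb, sb = quantize_int8(b)
print(array("f", a).itemsize, qa.itemsize)  # 4 bytes vs 1 byte per weight
print(int8_dot(qa, sa, qb, sb))             # close to the exact value -0.125
```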

One work designs and sets up an FPGA-based edge platform that admits DL computation offloading from mobile devices. To implement the platform, a wireless router and an FPGA board are combined. Tested with typical vision applications, this preliminary system shows advantages in both energy consumption and hardware cost over GPU-based alternatives. However, the disadvantage of FPGAs is that developing efficient DL algorithms on them is unfamiliar to most programmers.

Although tools such as Xilinx SDSoC can greatly reduce the difficulty, at least for now, additional work is still required to port state-of-the-art DL models, programmed for GPUs, to the FPGA platform. Although on-device DL computation, illustrated in Sec. V, can cater to lightweight DL services, an independent end device still cannot afford intensive DL computation tasks.

In the simplest case, the end device sends its computation requests to the cloud for DL inference results, as depicted in Fig. This kind of offloading is straightforward: it avoids DL task decomposition and the combinatorial problems of resource optimization, which may incur additional computation cost and scheduling delay, and it is thus simple to implement. DeepDecision ties together powerful edge nodes with less powerful end devices. An offloading system can be developed to enable online fine-grained partitioning of a DL task and to determine how to allocate the divided tasks to the end device and the edge node.
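The basic offloading decision can be sketched as choosing the execution site with the lowest estimated end-to-end latency (upload, compute, download, and round-trip time). All device speeds, link rates, and task sizes below are hypothetical numbers; a real system such as DeepDecision would also weigh accuracy, energy, and measured network conditions.

```python
def end_to_end_latency(task, site):
    """Estimated latency in seconds for running `task` at `site`."""
    remote = site["uplink_bps"] is not None
    upload = task["input_bits"] / site["uplink_bps"] if remote else 0.0
    download = task["output_bits"] / site["downlink_bps"] if remote else 0.0
    compute = task["flops"] / site["flops_per_s"]
    return upload + compute + download + site["rtt_s"]


def choose_site(task, sites):
    return min(sites, key=lambda name: end_to_end_latency(task, sites[name]))


sites = {
    "end": {"flops_per_s": 1e10, "uplink_bps": None, "downlink_bps": None, "rtt_s": 0.0},
    "edge": {"flops_per_s": 1e12, "uplink_bps": 1e8, "downlink_bps": 1e8, "rtt_s": 0.005},
    "cloud": {"flops_per_s": 1e13, "uplink_bps": 1e7, "downlink_bps": 1e7, "rtt_s": 0.05},
}
light = {"flops": 1e7, "input_bits": 1e6, "output_bits": 1e3}
heavy = {"flops": 5e10, "input_bits": 1e6, "output_bits": 1e3}
huge = {"flops": 1e13, "input_bits": 1e3, "output_bits": 1e3}
print([choose_site(t, sites) for t in (light, heavy, huge)])  # ['end', 'edge', 'cloud']
```

Light tasks stay local, compute-heavy tasks with sizable inputs go to the nearby edge, and extremely compute-heavy tasks with tiny inputs justify the trip to the cloud.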

As exemplified by MAUI, which can adaptively partition general computer programs, optimizing task allocation strategies under network constraints can reduce energy consumption by an order of magnitude.

When DL tasks are offloaded, a natural choice of collaboration is for the edge to perform data pre-processing and preliminary learning. Nevertheless, the hierarchical structure of DNNs can be further exploited to fit vertical collaboration. In one work, all layers of a DNN are profiled on the end device and the edge node in terms of data and computation characteristics, in order to generate performance prediction models.

Based on these prediction models, wireless conditions, and server load levels, the proposed Neurosurgeon evaluates each candidate point in terms of end-to-end latency or mobile energy consumption and partitions the DNN at the best one. Other work decides which part of a DNN should be deployed on the end device, the edge, or the cloud, while achieving the best latency and energy consumption of end devices. By taking advantage of EEoI, vertical collaboration can be made more adaptive: using an exit point, the local device can return the results of DL tasks it is confident about without sending any information to the cloud.
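The candidate-point evaluation described for Neurosurgeon can be sketched as follows, assuming per-layer latency estimates on the end device and the edge node (which the prediction models would supply), per-layer output sizes, and a fixed uplink bandwidth. Partition point p runs layers 0..p-1 locally, uploads the data at that point, and runs the remaining layers on the edge; returning the final result is ignored. All numbers are hypothetical, and an energy objective could replace latency in the same loop.

```python
def best_partition(device_ms, edge_ms, out_bytes, input_bytes, bandwidth_bps):
    """Return (partition point, estimated end-to-end latency in ms)."""
    n = len(device_ms)
    best = None
    for p in range(n + 1):
        if p == n:
            transfer_ms = 0.0  # everything runs locally
        else:
            sent = input_bytes if p == 0 else out_bytes[p - 1]
            transfer_ms = sent * 8 / bandwidth_bps * 1000
        total = sum(device_ms[:p]) + transfer_ms + sum(edge_ms[p:])
        if best is None or total < best[1]:
            best = (p, total)
    return best


# Hypothetical profile of a 4-layer CNN: conv, pool, fc1, fc2.
device_ms = [30.0, 5.0, 40.0, 2.0]
edge_ms = [3.0, 0.5, 4.0, 0.2]
out_bytes = [800_000, 20_000, 4_000, 40]
print(best_partition(device_ms, edge_ms, out_bytes, input_bytes=600_000, bandwidth_bps=8e6))
# -> (2, ~59.2): run conv + pool locally, upload the small pooled features
```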

To provide more accurate DL inference, the intermediate DNN output is sent to the cloud for further inference using additional DNN layers. In horizontal collaboration, the trained DNN models or the whole DL task can be partitioned and allocated to multiple end devices or edge nodes to accelerate DL computation by alleviating the resource cost of each of them. MoDNN executes DL in a local distributed mobile computing system over a Wireless Local Area Network (WLAN). By exploiting execution parallelism among multiple end devices, the DL computation can be significantly accelerated.
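Horizontal collaboration can be sketched by splitting the rows of a fully connected layer among devices in proportion to their compute capability, letting each device compute its slice of the output, and concatenating the slices. The capacities are hypothetical, and this proportional split is a simplification rather than MoDNN's actual partitioning scheme.

```python
def split_rows_by_capacity(num_rows, capacities):
    """Contiguous row ranges sized proportionally to capacity (largest-remainder rounding)."""
    total = sum(capacities)
    shares = [num_rows * c / total for c in capacities]
    counts = [int(s) for s in shares]
    leftover = num_rows - sum(counts)
    by_remainder = sorted(range(len(shares)), key=lambda i: shares[i] - counts[i], reverse=True)
    for i in by_remainder[:leftover]:
        counts[i] += 1
    ranges, start = [], 0
    for c in counts:
        ranges.append((start, start + c))
        start += c
    return ranges


def distributed_matvec(W, x, capacities):
    """Each device multiplies its own block of rows by x; the partial outputs are concatenated."""
    ranges = split_rows_by_capacity(len(W), capacities)
    partials = [[sum(w * v for w, v in zip(W[r], x)) for r in range(lo, hi)] for lo, hi in ranges]
    return [y for part in partials for y in part]


W = [[float(i + j) for j in range(3)] for i in range(10)]
x = [1.0, 0.5, -1.0]
print(split_rows_by_capacity(10, [1, 1, 2]))  # [(0, 3), (3, 5), (5, 10)]
print(distributed_matvec(W, x, [1, 1, 2]) == [sum(w * v for w, v in zip(row, x)) for row in W])  # True
```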

Fused Tile Partitioning (FTP) likewise divides CNN layers into independently distributable tasks that can be spread across devices. Beyond partitioning, edge computing frameworks are needed to orchestrate and maintain DL services automatically. First, where to deploy DL services in edge computing networks should be determined. One work introduces Radio Access Network (RAN) controllers deployed at edge nodes to collect data and run DL services, while a network controller placed in the cloud orchestrates the operations of the RAN controllers. In this manner, after running analytics and feeding the extracted relevant metrics to DL models, these controllers can provide DL services to users at the network edge.

OpenEI defines each DL algorithm as a four-element tuple ⟨Accuracy, Latency, Energy, Memory Footprint⟩ to evaluate the Edge DL capability of the target hardware platform. Based on such tuples, OpenEI can select, in an online manner, a model matched to a specific edge platform according to its Edge DL capabilities. By this means, DL computation can be distributed as needed to manage cost and performance, while also supporting other practical situations, such as engine heterogeneity and discontinuous operations.
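A selection rule over such four-element profiles can be sketched as follows. The profiles, the budget parameters, and the rule itself (pick the most accurate model that fits the platform's latency, energy, and memory budgets) are assumptions for illustration, not OpenEI's actual API or algorithm.

```python
from dataclasses import dataclass


@dataclass
class ModelProfile:
    name: str
    accuracy: float    # top-1 accuracy on the target task
    latency_ms: float  # measured on the target edge platform
    energy_mj: float
    memory_mb: float


def select_model(profiles, max_latency_ms, max_energy_mj, max_memory_mb):
    """Most accurate model satisfying every resource budget, or None if nothing fits."""
    feasible = [p for p in profiles
                if p.latency_ms <= max_latency_ms
                and p.energy_mj <= max_energy_mj
                and p.memory_mb <= max_memory_mb]
    return max(feasible, key=lambda p: p.accuracy, default=None)


profiles = [
    ModelProfile("large", 0.92, 180.0, 900.0, 250.0),
    ModelProfile("medium", 0.88, 60.0, 300.0, 90.0),
    ModelProfile("small", 0.81, 15.0, 60.0, 20.0),
]
print(select_model(profiles, max_latency_ms=100, max_energy_mj=500, max_memory_mb=128).name)  # medium
print(select_model(profiles, max_latency_ms=10, max_energy_mj=500, max_memory_mb=128))        # None
```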

Nevertheless, these pioneering works are not ready to natively support valuable but challenging features discussed in Section VI-B, such as computation offloading and collaboration, which still call for further development. Throughout the process of selecting appropriate edge hardware and associated software stacks for deploying different kinds of Edge DL services, it is necessary to evaluate their performance. To evaluate the end-to-end performance of Edge DL services, testbeds covering not only the edge computing architecture but also its combination with end devices and the cloud should be established, such as openLEON and CAVBench (the latter particularly for vehicular scenarios). Furthermore, simulation of the control plane for managing DL services has yet to be explored.

7. Deep Learning training at edge

Distributed training at the edge can be traced back to early work in which a decentralized Stochastic Gradient Descent (SGD) method is proposed for edge computing networks.

As shown in Fig. 17, one solution is for each end device to train a model on its local data, after which these model updates are aggregated at edge nodes. Another is for edge nodes to train their own local models, whose updates are exchanged and refined to construct a global model. Though large-scale distributed training at the edge avoids transmitting bulky raw datasets to the cloud, it inevitably introduces the communication cost of exchanging gradients between edge devices. Besides, in practice, edge devices may suffer from higher latency, lower transmission rates, and intermittent connections, further hindering gradient exchange between DL models belonging to different edge devices.

Most gradient exchanges are redundant, and hence updated gradients can be compressed to cut down the communication cost while preserving training accuracy. First, Deep Gradient Compression (DGC) stipulates that only important gradients are exchanged, i.e., only gradients larger than a threshold are transmitted, while smaller ones are accumulated locally. With the same purpose of reducing the communication cost of synchronizing gradients and parameters during distributed training, two mechanisms can be combined. The first is transmitting only important gradients by taking advantage of sparse training gradients.
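Exchanging only important gradients while keeping the rest locally can be sketched as top-k sparsification with residual accumulation. This is a simplification: DGC itself selects gradients via a threshold and adds techniques such as momentum correction, which are omitted here, and `k` is a hypothetical parameter.

```python
def sparsify_with_residual(grad, residual, k):
    """Send the k largest-magnitude accumulated coordinates; keep the others as local residual."""
    acc = [g + r for g, r in zip(grad, residual)]
    top = sorted(range(len(acc)), key=lambda i: abs(acc[i]), reverse=True)[:k]
    sent = {i: acc[i] for i in sorted(top)}  # sparse message: index -> value
    new_residual = [0.0 if i in sent else v for i, v in enumerate(acc)]
    return sent, new_residual


residual = [0.0] * 4
sent, residual = sparsify_with_residual([0.1, -2.0, 0.3, 0.05], residual, k=1)
print(sent, residual)  # {1: -2.0} [0.1, 0.0, 0.3, 0.05]
sent, residual = sparsify_with_residual([0.1, 0.0, 0.15, 0.0], residual, k=1)
print(sent, residual)  # small gradients accumulate until they are large enough to be sent
```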

Hidden weights are maintained to record how often a gradient coordinate participates in gradient synchronization, and gradient coordinates with large hidden weight values are deemed important gradients and are more likely to be selected in the next training round. Particularly, when training a large DL model, exchanging the corresponding model updates may consume more resources. Using an online version of KD can reduce this kind of communication cost. Different from the above works, in another scenario, training data are used to train models at edge nodes and are also uploaded to the cloud for further data analysis.

Hence, Laplace noise is added to these possibly exposed training data to strengthen training data privacy. In the works discussed in Section VII-A, the holistic network architecture is explicitly separated; specifically, training is limited to the end devices or the edge nodes independently instead of spanning both. In federated learning (FL), the only data that need to be uploaded are the updated DL models. Further, secure aggregation and differential privacy, which are useful in avoiding the disclosure of privacy-sensitive data contained in local updates, can be applied naturally.
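Adding Laplace noise follows the standard Laplace mechanism of differential privacy: noise with scale b = sensitivity / epsilon is added to each released value. The sketch below samples Laplace noise as the difference of two independent exponential variables; the sensitivity and epsilon values are hypothetical, and choosing them correctly for a given dataset is the hard part in practice.

```python
import random


def laplace_noise(scale, rng):
    """Laplace(0, scale) sample as the difference of two i.i.d. exponentials with mean `scale`."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def privatize(values, sensitivity, epsilon, seed=None):
    rng = random.Random(seed)
    scale = sensitivity / epsilon  # smaller epsilon -> more noise -> stronger privacy
    return [v + laplace_noise(scale, rng) for v in values]


noisy = privatize([0.0] * 20_000, sensitivity=1.0, epsilon=0.5, seed=1)
print(sum(noisy) / len(noisy))                  # close to 0
print(sum(abs(v) for v in noisy) / len(noisy))  # close to the scale b = 2.0
```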

In FL, raw training data are not required to be uploaded, thus largely reducing the communication cost. However, if the DL model is large, uploading updates such as model weights from edge devices to the central server may also consume non-negligible communication resources. FedPAQ simultaneously incorporates periodic averaging, partial device participation, and quantized message passing, and provides near-optimal theoretical guarantees for both strongly convex and non-convex loss functions, while empirically demonstrating the communication-computation trade-off. Rather than only reducing the communication cost on the uplink, another work takes both server-to-device and device-to-server communication into consideration.
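Quantizing uploaded updates, one of the ingredients FedPAQ combines, can be sketched with an unbiased stochastic quantizer followed by sample-size-weighted averaging (FedAvg-style) at the server. The quantizer scales by the max-abs value and randomly rounds to `levels` uniform levels; this simplified QSGD-style scheme is an assumption, not FedPAQ's exact quantizer, and periodic averaging and client sampling are omitted. In a real deployment, a client would transmit only the norm, the signs, and the small integers q.

```python
import math
import random


def stochastic_quantize(vec, levels, rng):
    """Unbiased: E[output] == vec. Each |v| / norm is randomly rounded to a multiple of 1 / levels."""
    norm = max(abs(v) for v in vec)
    if norm == 0:
        return list(vec)
    out = []
    for v in vec:
        scaled = abs(v) / norm * levels
        low = math.floor(scaled)
        q = low + (1 if rng.random() < scaled - low else 0)  # round up with prob. = fractional part
        out.append(math.copysign(q * norm / levels, v))
    return out


def weighted_average(updates, num_samples):
    """Server-side aggregation: average client updates weighted by local dataset size."""
    total = sum(num_samples)
    return [sum(n * u[i] for u, n in zip(updates, num_samples)) / total for i in range(len(updates[0]))]


rng = random.Random(0)
update = [0.3, -0.7, 1.0]
trials = [stochastic_quantize(update, levels=2, rng=rng) for _ in range(5000)]
print([round(sum(t[i] for t in trials) / len(trials), 2) for i in range(3)])  # close to update
print(weighted_average([[1.0, 1.0], [3.0, 3.0]], num_samples=[1, 3]))          # [2.5, 2.5]
```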

Naturally, such model compression is lossy, and unlike on the uplink … Training data at edge devices may also be distributed non-uniformly. For more powerful edge devices, ADSP lets them continue training while committing model aggregation at strategically decided intervals. For general cases, based on the deduced convergence bound for distributed learning with non-IID data distributions, the aggregation frequency under given resource budgets among all participating devices can be optimized with theoretical guarantees. Astraea reduces communication traffic by 92% by designing a mediator-based multi-client rescheduling strategy.

On the one hand, Astraea leverages data augmentation to alleviate the defect of non-uniformly distributed training data. On the other hand, it reschedules clients to mediators, minimizing the Kullback-Leibler divergence between each mediator's data distribution and the uniform distribution.
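The mediator-side rescheduling can be sketched as a greedy assignment: each mediator repeatedly adds the remaining client whose label distribution brings the mediator's combined distribution closest, in KL divergence, to uniform. The per-client label counts and the per-mediator capacity are hypothetical, and this greedy loop illustrates the idea rather than reproducing Astraea's exact procedure.

```python
import math


def kl_to_uniform(counts):
    """KL(P || U) where P is the normalized label histogram and U is uniform over the labels."""
    total = sum(counts)
    k = len(counts)
    return sum((c / total) * math.log((c / total) * k) for c in counts if c > 0)


def assign_to_mediators(clients, max_per_mediator):
    remaining = list(clients)
    mediators = []
    while remaining:
        combined = [0] * len(remaining[0])
        group = []
        while remaining and len(group) < max_per_mediator:
            best = min(remaining, key=lambda c: kl_to_uniform([a + b for a, b in zip(combined, c)]))
            remaining.remove(best)
            group.append(best)
            combined = [a + b for a, b in zip(combined, best)]
        mediators.append(group)
    return mediators


# Four clients, each holding samples of a single class (a skewed, non-IID split).
clients = [[10, 0], [10, 0], [0, 10], [0, 10]]
print(assign_to_mediators(clients, max_per_mediator=2))  # [[[10, 0], [0, 10]], [[10, 0], [0, 10]]]
```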

Another approach takes advantage of over-the-air computation, whose principle is to exploit the superposition property of a wireless multiple-access channel to compute the desired function through the concurrent transmission of multiple edge devices. During the transmission, concurrent analog signals from edge devices are naturally weighted by channel coefficients. The server then only needs to take the superposed, reshaped weights as the aggregation result, without other aggregation operations. When FL deploys the same neural network model to heterogeneous edge devices, devices with weak computing power …
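A toy numerical sketch of over-the-air aggregation, under strong simplifying assumptions: real-valued, perfectly known, nonzero channel gains; each device pre-scales its value by the inverse of its own gain so that the channel's weighting cancels; and a single additive Gaussian noise term at the receiver. The superposed received signal then yields the sum, and hence the average, of all device values in one transmission slot. Real systems must also respect transmit-power limits, which plain channel inversion ignores.

```python
import random


def over_the_air_mean(values, channel_gains, noise_std, rng):
    transmitted = [v / h for v, h in zip(values, channel_gains)]          # channel-inversion precoding
    superposed = sum(h * s for h, s in zip(channel_gains, transmitted))  # the channel weights and adds the signals
    received = superposed + rng.gauss(0.0, noise_std)                    # receiver noise
    return received / len(values)                                        # server rescales to obtain the average


rng = random.Random(0)
values = [1.0, 2.0, 3.0, 6.0]  # e.g. one coordinate of each device's model update
gains = [0.5, 1.2, 0.8, 2.0]
print(over_the_air_mean(values, gains, noise_std=0.0, rng=rng))  # 3.0 up to rounding
print(over_the_air_mean(values, gains, noise_std=0.1, rng=rng))  # noisy estimate of 3.0
```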


The number of devices involved in FL is usually large, ranging from hundreds to millions. Simply minimizing the average loss in such a large network may not deliver the required model performance on some devices. In fact, although the average accuracy under vanilla FL is high, the accuracy required for individual devices may not be guaranteed. q-Fair Federated Learning (q-FFL) addresses this by reweighting the objective so that devices with higher loss receive higher relative weight. Adaptively minimizing the q-FFL objective avoids the burden of handcrafting fairness constraints and can dynamically adjust the goal according to the required fairness, reducing the variance of the accuracy distribution among participating devices.
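The q-FFL objective can be written as min_w sum_k p_k * F_k(w)^(q+1) / (q+1), where F_k is device k's local loss and p_k its weight. Its gradient weights device k by p_k * F_k(w)^q, so higher-loss devices count more as q grows, and q = 0 recovers the ordinary weighted average. The sketch below only evaluates the objective and these relative weights for hypothetical losses; it does not implement a full federated training loop.

```python
def qffl_objective(losses, weights, q):
    """sum_k p_k * F_k^(q+1) / (q+1)."""
    return sum(p * f ** (q + 1) for p, f in zip(weights, losses)) / (q + 1)


def qffl_relative_weights(losses, weights, q):
    """Normalized per-device gradient weights, proportional to p_k * F_k^q."""
    raw = [p * f ** q for p, f in zip(weights, losses)]
    total = sum(raw)
    return [r / total for r in raw]


losses, weights = [0.1, 0.9], [0.5, 0.5]  # a well-served and a poorly served device
for q in (0, 1, 2):
    print(q, qffl_relative_weights(losses, weights, q))
# q = 0 keeps equal weights; larger q shifts weight toward the high-loss device
```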

In vanilla FL, local data samples are processed on each edge device. Such a manner prevents the devices from revealing private data to the server. However, the server also should not trust edge devices completely, since devices with abnormal behavior can forge or poison their training data, producing worthless model updates that harm the global model. To make FL capable of tolerating a small number of devices training on poisoned datasets, robust federated optimization defines a trimmed mean operation.

By filtering out not only the values produced by poisoned devices but also the natural outliers in the normal devices, robust aggregation that protects the global model from data poisoning is achieved.
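The coordinate-wise trimmed mean can be sketched as follows: for every model coordinate, the server sorts the values reported by all devices, discards the `beta` largest and `beta` smallest, and averages the rest. `beta` is a hypothetical parameter that should be at least the number of devices expected to be faulty.

```python
def trimmed_mean(updates, beta):
    """Coordinate-wise trimmed mean of a list of equally sized update vectors."""
    n = len(updates)
    if 2 * beta >= n:
        raise ValueError("need more than 2 * beta updates")
    aggregated = []
    for coords in zip(*updates):
        kept = sorted(coords)[beta:n - beta]
        aggregated.append(sum(kept) / len(kept))
    return aggregated


updates = [[1.0, 10.0], [1.1, 10.2], [0.9, 9.8], [100.0, -50.0]]  # the last device is poisoned
print(trimmed_mean(updates, beta=1))  # about [1.05, 9.9]; a plain mean would give about [25.75, -5.0]
```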

8. Deep Learning for optimizing edge

By this means, the popular content at the core network is estimated by applying feature-based collaborative filtering to the popularity matrix. For the second purpose, when using DNNs to optimize the strategy of edge caching, heavy online computation iterations can be avoided by offline training. A DNN consisting of an encoder for data regularization followed by a hidden layer can be trained with solutions generated by optimal or heuristic algorithms and deployed to determine the cache policy, hence avoiding online optimization iterations. Similarly, inspired by the fact that the output of the optimization problem for partial cache refreshing exhibits some patterns, an MLP is trained to take the current content popularity and the last content placement probability as input and generate the cache refresh policy.

As illustrated in these works, the complexity of optimization algorithms can be transferred to the training of DNNs, thus breaking the practical limitations of employing them. However, DNN-based methods are only applicable when optimization algorithms for the original caching problem exist. Therefore, the performance of DNN-based methods is bounded by the fixed optimization algorithms and is not self-adaptive. In other words, the DNN itself does not deal with the whole optimization problem.

Different from these DNN-based edge caching methods, DRL can exploit the context of users and networks and take adaptive strategies for maximizing long-term caching performance as the main body of the optimization method. Solving this kind of task offloading problem is NP-hard, since at least a combinatorial optimization of communication and computing resources, along with the contention among edge devices, is required. To this end, a DNN can be trained with the composite state of the edge computing network as the input and the offloading decision as the output. By this means, optimal solutions need not be computed online, avoiding delayed offloading decisions, and the computational complexity is transferred to DL training.

Further, a particular offloading scenario with respect to

In one study, to maximize offloading utility, the authors first quantify the influence of various communication modes on task offloading performance and accordingly propose applying DQL to select the optimal target edge node and transmission mode online. To optimize the total offloading cost, a DRL agent that modifies Dueling and Double DQL can allocate edge computation and bandwidth resources to end devices. Offloading reliability must also be considered: the coding rate at which data are transmitted is crucial for making offloading meet the required reliability level.
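The two DQL variants mentioned above differ from plain DQL in small, well-defined ways, sketched here on plain Python lists rather than on neural-network outputs. The dueling head combines a state value with mean-centred advantages; Double DQL lets the online network choose the next action while the target network evaluates it, which curbs the overestimation caused by taking a max over noisy estimates. This covers only those two rules, not the full agent of the cited work; the numbers are arbitrary.

```python
def dueling_q(value, advantages):
    """Dueling aggregation: Q(s, a) = V(s) + A(s, a) - mean_a' A(s, a')."""
    mean_adv = sum(advantages) / len(advantages)
    return [value + a - mean_adv for a in advantages]

def dqn_target(reward, next_q_target, gamma, done):
    """Plain DQL target: the target network both selects and evaluates the action."""
    return reward if done else reward + gamma * max(next_q_target)

def double_dqn_target(reward, next_q_online, next_q_target, gamma, done):
    """Double DQL target: the online network selects, the target network evaluates."""
    if done:
        return reward
    best_action = max(range(len(next_q_online)), key=lambda a: next_q_online[a])
    return reward + gamma * next_q_target[best_action]

# Next-state estimates: the online net uses a dueling head; target values are given.
next_q_online = dueling_q(1.0, [0.5, 2.0, -0.5])   # approx. [0.833, 2.333, -0.167]
next_q_target = [3.0, 1.0, 0.5]
plain = dqn_target(1.0, next_q_target, 0.9, done=False)
double = double_dqn_target(1.0, next_q_online, next_q_target, 0.9, done=False)
print(plain, double)  # approx. 3.7 and 1.9
```

Here the target network's largest estimate (3.0) belongs to an action the online network does not prefer, so Double DQL yields a smaller, less optimistic target.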

Hence, one study investigates the effects of the coding block length and formulates an MDP concerning resource allocation, which is then solved by DQL to improve the average offloading reliability.

Resource-saving TO BE APPEARED IN IEEE COMMUNICATIONS SURVEYS & TUTORIALS

Experimental results indicate that, compared with DQL, Double DQL saves more energy and achieves higher training efficiency. Nonetheless, the action space of DQL-based approaches may grow rapidly as the number of edge devices increases. IoT edge environments powered by energy harvesting (EH) have also been investigated. In EH environments, energy harvesting makes the offloading problem more complicated: IoT edge devices can harvest energy from ambient radio-frequency signals, so the energy available for computation and transmission varies randomly over time.
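A minimal battery model shows why energy harvesting complicates offloading: the stored energy becomes part of the state, and the set of feasible actions changes from slot to slot. The update order (spend, then harvest, capped at capacity), the action names, and the energy values are all assumptions for illustration.

```python
def battery_step(battery, energy_used, harvested, capacity):
    """Assumed battery dynamics: spend energy for this slot's action, then add the
    harvested energy, never exceeding the battery capacity."""
    if energy_used > battery:
        raise ValueError("infeasible action: not enough stored energy")
    return min(battery - energy_used + harvested, capacity)

def feasible_actions(battery, action_energy):
    """Actions whose energy demand can be covered by the current battery level."""
    return [name for name, energy in action_energy.items() if energy <= battery]

ACTION_ENERGY = {"compute_locally": 3.0, "offload": 1.0, "idle": 0.0}  # hypothetical

battery = 2.0
print(feasible_actions(battery, ACTION_ENERGY))  # ['offload', 'idle']
battery = battery_step(battery, ACTION_ENERGY["offload"], harvested=5.0, capacity=4.0)
print(battery)                                   # 4.0
print(feasible_actions(battery, ACTION_ENERGY))  # ['compute_locally', 'offload', 'idle']
```

Because the harvested amount is random, the same action can leave the device with different feasible sets in the next slot, which is why an agent in this setting would need the battery level in its state.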

Letting DRL agents freely take over the whole process of computation offloading may lead to huge computational complexity. By using DRL only for the NP-hard offloading decision problem, rather than for both the offloading decision and the resource allocation, the action space of the DRL agent is narrowed, and the offloading performance is not impaired, since the resource allocation problem is solved optimally.

Edge DL services are envisioned to be deployed on BSs in cellular networks, as already implemented in one study. DL-based methods can be used to assist the smooth transition of connections between devices and edge nodes.
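The decomposition can be sketched under simplifying assumptions: transmission delay is ignored, the edge CPU capacity `f_edge` is split among offloaded tasks, and task i with c_i cycles takes c_i / f_i time. For a fixed binary decision, minimizing Σ_i c_i / f_i subject to Σ_i f_i = F has the closed-form KKT solution f_i = F·sqrt(c_i) / Σ_j sqrt(c_j), so only the binary choice would be left to the DRL agent. The last function compares action-space sizes with a hypothetical agent that must also pick one of L CPU shares per device.

```python
import math

def optimal_edge_allocation(cycles, f_edge):
    """Closed-form solution of  min sum_i c_i / f_i  s.t.  sum_i f_i = f_edge.
    From the KKT conditions, f_i is proportional to sqrt(c_i)."""
    roots = [math.sqrt(c) for c in cycles]
    total = sum(roots)
    return [f_edge * r / total for r in roots]

def offloading_cost(decision, cycles, f_local, f_edge):
    """Latency of one binary offloading decision (e.g. chosen by a DRL agent),
    with the edge CPU split optimally among the offloaded tasks."""
    offloaded = [c for d, c in zip(decision, cycles) if d]
    local = sum(c / f_local for d, c in zip(decision, cycles) if not d)
    if not offloaded:
        return local
    alloc = optimal_edge_allocation(offloaded, f_edge)
    return local + sum(c / f for c, f in zip(offloaded, alloc))

def action_space_sizes(n_devices, cpu_levels):
    """Binary-only agent vs. an agent choosing 'local' or one of cpu_levels shares."""
    return 2 ** n_devices, (cpu_levels + 1) ** n_devices

print(optimal_edge_allocation([4.0, 1.0], f_edge=6.0))                  # [4.0, 2.0]
print(offloading_cost((1, 1), [4.0, 1.0], f_local=1.0, f_edge=6.0))     # 1.5
print(action_space_sizes(10, 10))                                       # (1024, 25937424601)
```

For 10 devices and 10 CPU levels, the binary agent chooses among 1,024 actions instead of roughly 2.6 × 10^10, while the closed-form allocation keeps each decision's cost exact under this model.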

To minimize energy consumption per bit, one study approximates the optimal device association strategy with a DNN. Meanwhile, a digital twin of the network environment is established at the central server to train this DNN offline. To minimize the interruptions a mobile device experiences as it moves from one edge node to the next along its trajectory, an MLP can be used to predict the available edge nodes at a given location and time. Moreover, determining the best edge node with which the mobile device should associate still requires evaluating the cost of the interaction between the mobile device and each edge node.
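Once availability and interaction cost are predicted, the association step reduces to a small decision rule. This sketch assumes the predictor (the MLP in the text) has already produced per-node availability probabilities, and it charges a hypothetical `disruption_penalty` when the chosen node turns out to be unavailable; node names and values are made up.

```python
def choose_edge_node(avail_prob, interaction_cost, disruption_penalty=10.0):
    """Pick the edge node with the lowest expected cost. avail_prob[n] is the
    predicted probability that node n is available at the next (location, time);
    an unavailable node is charged a hypothetical disruption penalty instead."""
    def expected_cost(node):
        p = avail_prob[node]
        return p * interaction_cost[node] + (1.0 - p) * disruption_penalty
    return min(avail_prob, key=expected_cost)

# Hypothetical predictor output and interaction costs for three nodes.
avail = {"A": 0.95, "B": 0.60, "C": 0.99}
cost = {"A": 3.0, "B": 1.0, "C": 4.0}
print(choose_edge_node(avail, cost))                          # A
print(choose_edge_node(avail, cost, disruption_penalty=0.0))  # B
```

With the penalty removed, the cheapest but least reliable node B wins, which is exactly the disruption risk the availability prediction is meant to avoid.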

Finally, based on the ability to predict the available edge nodes along with the corresponding potential costs, the mobile device can associate with the best edge node, and hence the possibility of disruption is minimized.

Aiming at minimizing long-term system power consumption in the communication scenario with multiple modes , i.

Of course, security protection generally requires additional energy consumption as well as computation and communication overhead. Consequently, each edge device must optimize its defense strategy, viz., choose its transmit power, channel, and time, without violating its resource limitations. The optimization is challenging since it is hard to estimate both the attack model and the dynamic model of edge computing networks.
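Because the attack model is hard to estimate, model-free reinforcement learning fits this setting: the device learns a defense purely from observed rewards. The sketch below is a simplification under stated assumptions: tabular Q-learning instead of DRL, a toy jammer that sweeps channels cyclically, and hypothetical power costs. It covers the channel and transmit-power choices but not transmission timing.

```python
import random

N_CHANNELS = 4
POWER_COST = {"low": 0.1, "high": 0.5}  # hypothetical energy cost per slot
ACTIONS = [(ch, p) for ch in range(N_CHANNELS) for p in POWER_COST]

def step(jammed_prev, action):
    """Toy sweeping jammer: it jams channel (jammed_prev + 1) mod N this slot.
    A transmission succeeds if it avoids the jammed channel or uses high power."""
    jammed = (jammed_prev + 1) % N_CHANNELS
    channel, power = action
    success = channel != jammed or power == "high"
    reward = (1.0 if success else 0.0) - POWER_COST[power]
    return jammed, reward

def q_learning(steps=20000, alpha=0.1, gamma=0.5, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_CHANNELS) for a in ACTIONS}
    state = 0  # state = channel jammed in the previous slot
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)                         # explore
        else:
            action = max(ACTIONS, key=lambda a: q[(state, a)])  # exploit
        next_state, reward = step(state, action)
        best_next = max(q[(next_state, a)] for a in ACTIONS)
        q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
        state = next_state
    return q

def greedy_policy(q):
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_CHANNELS)}

policy = greedy_policy(q_learning())
for prev_jammed, (channel, power) in sorted(policy.items()):
    print(f"jammer was on {prev_jammed}: transmit on channel {channel} at {power} power")
```

The learned policy avoids the channel the jammer will hit next and keeps transmit power low, trading off security against the energy budget without ever being told the jammer's rule.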

DRL-based security solutions can provide secure offloading

In general, SDN is designed to separate the control plane from the data plane, thus allowing the whole network to be operated with a global view. In one study, an SDN-enabled edge computing network catering to smart cities is investigated. To improve the service performance of this prototype network, DQL is deployed in its control plane to orchestrate networking, caching, and computing resources. Edge computing can empower IoT systems with more computation-intensive and delay-sensitive services, but it also raises challenges for the efficient management and coordination of storage, computation, and communication resources.

To minimize the average end-to-end service delay, policy-gradient-based DRL combined with an actor-critic (AC) architecture can handle the assignment of edge nodes, the decision on whether to cache the requested content, the choice of the edge node that performs the computation tasks, and the allocation of computation resources. Similar to the integration of networking, caching, and computing in the SDN-enabled network above, Double-Dueling DQL, which offers more robust performance, can be used to orchestrate available resources and improve the performance of future Internet of Vehicles (IoV) systems. By using multi-timescale DRL, both the immediate impact of mobility and the prohibitively large action space in resource allocation optimization are addressed.
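A one-step actor-critic can be sketched for the simplest sub-decision listed above, assigning a request to an edge node. Assumptions: node delays (`delays`) are fixed but are observed only through sampled rewards, the actor is a softmax policy over nodes, and the critic is a single scalar value used as the baseline. The cited scheme's joint caching and resource-allocation decisions are not modelled.

```python
import math
import random

def softmax(prefs):
    m = max(prefs)  # subtract the max for numerical stability
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]

def actor_critic_node_selection(delays, steps=5000, actor_lr=0.05, critic_lr=0.1, seed=0):
    """One-step actor-critic: the actor is a softmax policy over edge nodes and
    the critic is a scalar value estimate used as the baseline."""
    rng = random.Random(seed)
    prefs = [0.0] * len(delays)  # actor parameters (action preferences)
    value = 0.0                  # critic estimate of the expected reward
    for _ in range(steps):
        probs = softmax(prefs)
        node = rng.choices(range(len(delays)), weights=probs)[0]
        reward = -delays[node]        # lower delay means higher reward
        td_error = reward - value     # one-step episode, so no bootstrap term
        value += critic_lr * td_error # critic update
        for i in range(len(prefs)):   # policy-gradient step on log pi(node)
            grad = (1.0 if i == node else 0.0) - probs[i]
            prefs[i] += actor_lr * td_error * grad
    return softmax(prefs)

probs = actor_critic_node_selection([5.0, 2.0, 8.0])
print([round(p, 3) for p in probs])
```

The critic's baseline does not change the expected policy gradient but reduces its variance, which is the main reason an AC architecture is paired with policy-gradient DRL.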

Conclusion

Deep learning, a key technique of artificial intelligence, and edge computing are expected to benefit each other. This survey has introduced and discussed in depth numerous application scenarios as well as fundamental enabling techniques for edge intelligence and intelligent edge. In summary, the key challenge of extending DL from the cloud to the network edge is how to design and build an edge computing architecture that achieves optimal DL training and inference performance under the many constraints of networking, communication, computing power, and energy consumption.

References

[1] “Cloud Extending to Where the Things Are: Fog Computing and the Internet of Things.” [Online]. http://www.cisco.com/c/dam/en_us/solutions/trends/iot/docs/computing-overview.pdf

[2] “Forecast and Methodology for the Cisco Global Cloud Index.” [Online]. https://www.cisco.com/c/en/us/solutions/collateral/service-provider/global-cloud-index-gci/white-paper-c11-738085.html

[3] “To offload or not to offload? The bandwidth and energy costs of mobile cloud computing,” M. V. Barbera, S. Kosta, A. Mei, et al., in IEEE Conference on Computer Communications (INFOCOM 2013), 2013, pp. 1285-1293.

[4] “Quantifying the Impact of Edge Computing on Mobile Applications,” W. Hu, Y. Gao, K. Ha, et al., in Proc. 7th ACM SIGOPS Asia-Pacific Workshop Syst. (APSys 2016), 2016, pp. 1-8.

[5] “Introductory Technical White Paper on Mobile-Edge Computing.” [Online]. https://portal.etsi.org/Portals/0/TBpages/MEC/Docs/Mobile-edge_Computing_-_Introductory_Technical_White_Paper_V1%2018-09-14.pdf